🚀 Speed up NPM/Yarn install in Gitlab

2020-04-22

This article sums up my findings from a Gitlab issue that is still unresolved at the time of writing (April 2020). In short, when node_modules becomes large, Gitlab hits a huge performance bottleneck because of the number of files it has to archive and upload during caching. I have come up with a few ways to improve caching performance.

At RingCentral we use runners with a local S3 cache server. One of our monorepos has about 120K files after yarn install, with an overall size of about 400–600 megabytes. A monorepo is a git repository that holds multiple packages at once; the packages can have their own dependencies and are usually linked together. This is the worst-case scenario, since the number of Node modules can become insanely big. But this article can also help with regular repositories if they have lots of dependencies.

Advice zero would be to use Yarn Workspaces instead of Lerna to manage the monorepo, as the latter is 2–3 times slower. The install and caching phases take roughly:

- Cache download: about 1 minute

- Cache create: 4 minutes (zipping hundreds of thousands of files)

- Cache upload: 1 minute

- Bare Yarn install: 3 minutes

- Yarn install on top of cache: 1 minute

This is our baseline, now let’s analyze how we can speed things up.

Solution 1: Create cache in first job, run others with read-only cache

before_script:
  - yarn install

cache:
  key: $CI_PROJECT_ID
  policy: pull
  untracked: true

install:
  stage: install
  script: echo 'Warming the cache'
  cache:
    key: $CI_PROJECT_ID
    policy: pull-push
    paths:
      - .yarn
      - node_modules
      - 'packages/*/node_modules'

lint:
  stage: test
  script: yarn run lint:all

Key measurements:

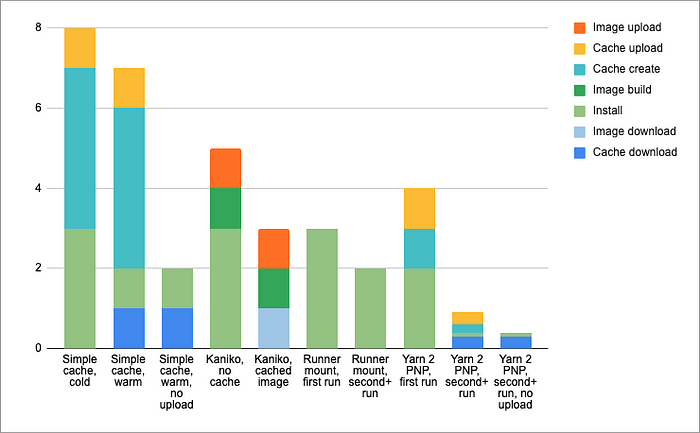

- Install, cache create & upload: about 7 min measured (3+4+1 = 8 by the rough baseline)

- Download, install, skip upload: 1+1 = 2 min

In the best case, without the cache upload, this speeds things up a lot. It’s OK to spend time in ONE job creating and uploading the cache if you have many other jobs that only download it.

Given that cache create+upload takes 5 min, and Yarn install with cache saves us (3 min pure install - 1 min cached install) = 2 min per job, at least three jobs must successfully download the cache and use it in Yarn install to justify the time spent on uploading (two jobs recover only 4 of the 5 minutes). The more jobs use the cache, the better.
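The break-even point can be checked with a few lines of shell arithmetic. This is only a sketch that takes the rough baseline figures at face value (whole minutes, download time not counted, as in the reasoning above); the function name `breakeven_jobs` is made up for illustration.

```shell
#!/bin/sh
# Break-even sketch: how many read-only jobs are needed before the one-off
# cost of creating and uploading the cache pays for itself.
# All figures are whole minutes from the article's rough baseline.

# breakeven_jobs OVERHEAD SAVED_PER_JOB
# Prints the smallest job count N with N * SAVED_PER_JOB >= OVERHEAD
# (integer ceiling division).
breakeven_jobs() {
  overhead=$1
  saved=$2
  echo $(( (overhead + saved - 1) / saved ))
}

create_and_upload=$(( 4 + 1 ))   # cache create + cache upload
saved_per_job=$(( 3 - 1 ))       # bare install - install on top of cache

echo "jobs needed: $(breakeven_jobs "$create_and_upload" "$saved_per_job")"
```

With these inputs the script reports 3 jobs. Plugging in your own timings shows quickly whether a dedicated cache-warming job is worth it for your pipeline.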

Solution 1, but with per-job cache for selected jobs

The downside is that the cache can’t be configured “per-job”, since you can have only one job that uploads the cache. If you need to cache something else besides node_modules, you're stuck. And unfortunately, jobs like ESLint spend more time on the actual analysis than on installation, so caching their artifacts is even more important than caching node_modules.

A solution could be to disable Yarn workspaces for such jobs so that only top-level packages are installed. This cut our Yarn install time from 3.5 min to ~1 min, so there is no need to cache node_modules at all; just cache the ESLint artifacts.

lint:
  stage: test
  before_script:
    - sed -i 's/workspaces/workspaces-disabled/g' package.json
    - yarn install --no-lockfile
    - sed -i 's/workspaces-disabled/workspaces/g' package.json
  script: yarn lint
  cache: # here we use per-job cache
    key: $CI_COMMIT_REF_NAME-lint
    paths:
      - .eslint
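To see what the two sed calls do, here is a small sketch that runs the same substitutions on a throwaway package.json. The manifest content is a made-up example, and the sketch writes through a temporary file instead of using `sed -i` only so that it behaves the same with GNU and BSD sed.

```shell
#!/bin/sh
# Sketch of the workspace toggle on a throwaway package.json.
# The file content below is a made-up example, not a real monorepo manifest.
set -e

workdir=$(mktemp -d)
manifest="$workdir/package.json"

cat > "$manifest" <<'EOF'
{
  "name": "monorepo-root",
  "private": true,
  "workspaces": ["packages/*"],
  "devDependencies": { "eslint": "^6.8.0" }
}
EOF

# Step 1: rename the key so Yarn no longer sees a "workspaces" field.
sed 's/workspaces/workspaces-disabled/g' "$manifest" > "$manifest.tmp"
mv "$manifest.tmp" "$manifest"
disabled=$(grep -c '"workspaces-disabled"' "$manifest")

# (the CI job runs `yarn install --no-lockfile` at this point)

# Step 2: restore the original key so later scripts see the real manifest.
sed 's/workspaces-disabled/workspaces/g' "$manifest" > "$manifest.tmp"
mv "$manifest.tmp" "$manifest"
restored=$(grep -c '"workspaces":' "$manifest")

echo "disabled=$disabled restored=$restored"
```

Note that the substitution is purely textual: it renames every occurrence of the string `workspaces` in the file, so a script or package name containing that word would be rewritten too (and restored again by the second call).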

Solution 2: Build a docker image and use it in other jobs

Since each job runs in a container, we can build an image for that container as the first step. We will use Kaniko because it is faster than regular Docker-in-Docker (DIND):

stages:
  - docker
  - build
  - test

variables:
  IMAGE_TAG: $CI_REGISTRY_IMAGE:$CI_COMMIT_REF_NAME

docker:
  stage: docker
  image:
    name: gcr.io/kaniko-project/executor:debug
    entrypoint: ['']
  script:
    - echo "{\"auths\":{\"${CI_REGISTRY}\":{\"username\":\"${CI_REGISTRY_USER}\",\"password\":\"${CI_REGISTRY_PASSWORD}\"}}}" > /kaniko/.docker/config.json
    - time /kaniko/executor --context="${CI_PROJECT_DIR}" --dockerfile="${CI_PROJECT_DIR}/Dockerfile" --destination="${IMAGE_TAG}" --cache=true

build:
  image: '${IMAGE_TAG}'
  stage: build
  script: yarn build
  artifacts:
    paths:
      - 'packages/*/lib' # all artifacts that are used by tests
  variables:
    GIT_STRATEGY: none

lint:
  image: '${IMAGE_TAG}'
  before_script: cd /opt/workspace
  script: yarn lint
  variables:
    GIT_STRATEGY: none
  cache: # here we use per-job cache
    key: $CI_COMMIT_REF_NAME-lint
    paths:
      - .eslint

We’re using Gitlab’s own Container Registry via environment variables. You can use Docker Hub or anything else too; just define your own environment variables.

We can skip the git checkout (GIT_STRATEGY: none) since the code is already a part of image.
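The long echo line in the docker job only writes a small JSON file with registry credentials for Kaniko. A more readable sketch of the same step is shown below; the function name `write_registry_auth` and the sample values are placeholders, and in CI the values would come from CI_REGISTRY, CI_REGISTRY_USER and CI_REGISTRY_PASSWORD with /kaniko/.docker as the output directory.

```shell
#!/bin/sh
# write_registry_auth REGISTRY USER PASSWORD OUT_DIR
# Writes a Docker-style config.json with a single "auths" entry, the same
# shape the echo line in the docker job produces for Kaniko.
write_registry_auth() {
  mkdir -p "$4"
  printf '{"auths":{"%s":{"username":"%s","password":"%s"}}}\n' \
    "$1" "$2" "$3" > "$4/config.json"
}

# Placeholder values for a local dry run; nothing here is a real credential.
out_dir=$(mktemp -d)
write_registry_auth registry.example.com ci-user not-a-real-password "$out_dir"
cat "$out_dir/config.json"
```

This sketch does no JSON escaping, so a password containing a double quote or a backslash would produce an invalid file; the original echo line has the same limitation.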

To get maximum optimization, we will build a special image manually (as described in the article https://medium.com/@dSebastien/speeding-up-your-ci-cd-build-times-with-a-custom-docker-image-3bfaac4e0479) and push it from a local computer to the registry once in a while. The dependencies in this base image may become outdated, so on CI we use the base image only as a foundation for the real image that is used in jobs. The pre-warmed cache of the base image helps speed up the build of the second one.

Let’s create a shared.Dockerfile for the base image.

FROM ringcentral/web-tools:alpine

WORKDIR /opt/workspace

ADD yarn.lock package.json lerna.json ./

# Note!
# Add one by one, you can't use a glob because of a bug in Docker
ADD packages/a/package.json packages/a/package.json
ADD packages/b/package.json packages/b/package.json
ADD packages/c/package.json packages/c/package.json

RUN yarn install --link-duplicates

You can build and push this image like so:

$ docker login YOUR-GITLAB-OR-OTHER-REGISTRY
$ docker build --tag SOMENAME --file shared.Dockerfile .
$ docker tag SOMENAME SOMENAME:latest
$ docker push SOMENAME:latest

The CI job also needs a Dockerfile, which does pretty much the same but is based on the previous image:

FROM SOMENAME:latest

WORKDIR /opt/workspace

ADD yarn.lock package.json lerna.json ./

# Note!
# Add one by one, you can't use a glob because of a bug in Docker
ADD packages/a/package.json packages/a/package.json
ADD packages/b/package.json packages/b/package.json
ADD packages/c/package.json packages/c/package.json

# --link-duplicates cuts the size of node_modules
RUN yarn install --link-duplicates

# now we add all the files
ADD . ./

RUN rm -rf $(yarn cache dir)

Make sure you have a .dockerignore that excludes all node_modules and build artifacts, otherwise your image will become huge.
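As a starting point, a .dockerignore for this layout could be generated as sketched below. The patterns are assumptions derived from the paths used elsewhere in this article (packages/*/node_modules, packages/*/lib, .eslint), and the helper name `write_dockerignore` is made up; adjust both to your repository.

```shell
#!/bin/sh
# Writes a starter .dockerignore into the given directory (default: cwd).
# The patterns are assumptions based on the paths used in this article.
write_dockerignore() {
  target_dir=${1:-.}
  cat > "$target_dir/.dockerignore" <<'EOF'
# dependencies are installed inside the image
node_modules
packages/*/node_modules
# build and lint artifacts
packages/*/lib
.eslint
EOF
}

# Dry run in a temporary directory so the real repository is not touched.
demo_dir=$(mktemp -d)
write_dockerignore "$demo_dir"
cat "$demo_dir/.dockerignore"
```

A bare `node_modules` pattern only matches the folder at the root of the build context, which is why the per-package folders are listed separately.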

In our case timings were:

- Install step of docker build on CI: 3 min

- Image upload: 1 minute

- Image download: 1.5 minutes

This gives timings similar to Solution 1: the Kaniko build job takes about 5 min overall, the regular one about 6–7 min.

Overall, this looks like a viable approach for heavy jobs that do require a full install. Image download plus a quick install (1.5 min + 1 min) takes less time than a full Yarn install (3.5 min) or the Gitlab cache download, so having the image is beneficial.

For quick jobs like ESLint, which need only a top-level install rather than a full install of the monorepo, it is overkill, since these jobs won’t need all the dependencies anyway. But that’s micromanagement…

Solution 3: Mount global Yarn cache to runner & don’t use Gitlab cache for node_modules

Gitlab jobs run in Docker containers, so we can mount the directory that Yarn uses as its global package cache inside the container to a directory on the runner. This way every container always has a pre-warmed global Yarn cache. Unfortunately, this only saves time on network downloads; Yarn still copies tons of files into place during install.

To do that, we need to add the following to config.toml:

# /etc/gitlab-runner/config.toml
[[runners]]
  ...
  [runners.docker]
    volumes = ["/cache:/usr/local/share/.cache:rw"]

After that disable caching of node_modules in all jobs.

This produced a steady 2-minute installation time, and now you can cache individual artifacts per job. You can speed it up further by using top-level installs for certain jobs.

stages:
  - docker
  - build
  - test

image: node:lts

before_script:
  - yarn install

build:
  stage: build
  script: yarn build
  artifacts:
    paths:
      - 'packages/*/lib' # all artifacts that are used by tests

lint:
  stage: test
  script: yarn lint
  cache: # here we use per-job cache
    key: $CI_COMMIT_REF_NAME-lint
    paths:
      - .eslint
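The mount only helps if Yarn's global cache really lives under the mounted path inside the job container. A quick check that could be run in a job is sketched below; `is_under_mount` is a made-up helper, and the fallback path is only an assumed example for machines where Yarn is not installed.

```shell
#!/bin/sh
# is_under_mount CACHE_DIR MOUNT_POINT
# Succeeds when CACHE_DIR is MOUNT_POINT itself or a path below it.
is_under_mount() {
  case "$1/" in
    "$2"/*) return 0 ;;
    *) return 1 ;;
  esac
}

# Container side of the volume declared in config.toml.
mount_point=/usr/local/share/.cache

# Inside a job, `yarn cache dir` prints the folder Yarn uses for its global cache.
# The fallback is an assumed example value, used only when the command fails.
cache_dir=$(yarn cache dir 2>/dev/null || echo /usr/local/share/.cache/yarn/v6)

if is_under_mount "$cache_dir" "$mount_point"; then
  echo "Yarn cache $cache_dir is on the mounted volume"
else
  echo "Yarn cache $cache_dir is NOT under $mount_point" >&2
fi
```

If the check reports a different location, for example because the job image runs as a non-root user, adjust the container side of the volume to match.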

Solution 4: Yarn 2 with PNP

Yarn 2 with Plug’n’play alleviates the caching issue dramatically: https://dev.to/arcanis/introducing-yarn-2-4eh1.

You can even use the regular cache, because it’s still fast enough: Yarn’s cache contains zip files instead of thousands of small source files. And the install itself is much faster because Plug’n’play eliminates the time spent copying from the cache to the workspace.

This approach takes quite a lot of effort to work around all the bugs of early software, but as a reward you can use the cache as intended:

stages:
  - docker
  - build
  - test

image: node:lts

before_script:
  - yarn install

# here we can use one cache for all jobs
cache:
  paths:
    - node_modules
    - 'packages/*/node_modules'
    - .eslint

build:
  stage: build
  script: yarn build
  artifacts:
    paths:
      - 'packages/*/lib' # all artifacts that are used by tests

test:
  stage: test
  script: yarn test

The speed is amazing. The first run without a cache takes the same 1–2 minutes, but cache creation and upload take only ~20 seconds each. The magic happens on subsequent installs, when the cache is warm: install takes 10 seconds (!!!) and cache download the same ~20 seconds. If we disable cache upload, the overall overhead on subsequent jobs is just 30 seconds!!!
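To put the numbers side by side, here is a rough sketch that adds up install and caching time for a whole pipeline. The job count of 5 is hypothetical, the per-job figures are rounded from the measurements quoted in this article, and the Yarn 2 first run assumes the upper end of the 1–2 minute install plus 20 seconds each for cache creation and upload.

```shell
#!/bin/sh
# pipeline_overhead FIRST SUBSEQUENT JOBS
# Total install/caching time in seconds for a pipeline of JOBS jobs, where the
# first job costs FIRST seconds and each later job costs SUBSEQUENT seconds.
pipeline_overhead() {
  echo $(( $1 + $2 * ($3 - 1) ))
}

jobs_count=5   # hypothetical pipeline size, not a figure from the article

# Figures rounded from the article's measurements, in seconds.
gitlab_cache=$(pipeline_overhead 420 120 "$jobs_count")   # ~7 min warm-up, then 2 min
mounted_cache=$(pipeline_overhead 120 120 "$jobs_count")  # steady 2 min install
yarn2_pnp=$(pipeline_overhead 160 30 "$jobs_count")       # 120+20+20 s, then 30 s

echo "Gitlab cache:  ${gitlab_cache}s"
echo "Mounted cache: ${mounted_cache}s"
echo "Yarn 2 PNP:    ${yarn2_pnp}s"
```

For five jobs this gives 900, 600 and 280 seconds respectively. The totals treat jobs as if they ran one after another, so they compare total runner time rather than wall-clock pipeline duration.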

Summary

As you can see, the regular Gitlab cache performs worst. Even fairly fast execution with cache uploads blocked does not make up for the time lost on cache creation and upload.

Kaniko comes second. It is quite fast overall, faster when nothing is cached yet, but slower on subsequent jobs. It requires juggling Docker images, and it allows a per-job cache.

The simple idea of mounting the global Yarn cache to the runner gave surprisingly good and stable results. It is a bit worse than the regular Gitlab cache, but that depends on how many subsequent jobs you have. If there are not many, it’s your best bet.

But the absolute winner is undoubtedly Yarn 2 with PNP. It’s much faster on the first run, and 30 seconds on subsequent jobs is the best thing ever. And you can make it even faster if you commit its local cache ;)